There are various ways to say it, but they all contain the word “memory” and they all mean essentially the same thing. Computers have memory. This is not to be confused with storage. Storage is where data is kept for the longer term, such as on a hard disk, floppy disk, tape, CD, DVD, flash drive, or some other long-term data storage technology.

Memory is not the same thing as storage. Memory is electronic, and most kinds of memory require a live electrical source to retain their data. If the power is cut off, the data in memory is lost almost instantly. Memory is also MUCH faster than storage. It’s also MUCH more expensive per byte. As a result, storage is usually MUCH larger in capacity than memory.

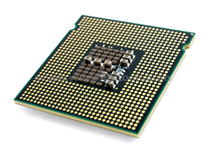

Computer processors cannot execute programs stored on storage directly. Programs must first be copied from storage into memory before the CPU can transfer control to the instructions in the program. Data that a program uses, whether it’s a word processing document, a spreadsheet, a photo for editing, or anything else, must be copied from storage into the computer’s memory before the CPU can see and work with the data.
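
To make the “copy from storage into memory” step concrete, here’s a minimal Python sketch. It writes a small temporary file to stand in for a document on disk, then reads the whole file into a `bytes` object, which lives in RAM. The helper name `load_into_memory` is made up for illustration; real programs and operating systems do this copying in far more elaborate ways.

```python
import os
import tempfile

def load_into_memory(path: str) -> bytes:
    """Copy a file's entire contents from storage into a bytes object in RAM."""
    with open(path, "rb") as f:
        return f.read()

# Create a small file on disk to stand in for a document in storage.
with tempfile.NamedTemporaryFile(delete=False, suffix=".txt") as tmp:
    tmp.write(b"Hello from storage!")
    path = tmp.name

data = load_into_memory(path)   # the program can now work with the bytes in memory
os.remove(path)                 # deleting the file doesn't touch the in-memory copy
print(data)                     # b'Hello from storage!'
print(len(data))                # 19
```

Once the bytes are in memory, the program no longer needs the file at all, which is why the copy survives deleting it.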

In computer technology, memory is the electrical hardware that stores data. This data is in the form of electrical charges, which are either there or aren’t. A single charge (or lack thereof) is called a bit. Bits are grouped together in groups of 8, and a group of 8 bits is called a byte. A byte is the smallest unit most processors can read or write individually. In fact, every byte in a computer’s memory has its own address, similar to a postal address on a mailbox on a street lined with houses.

A single bit generally represents a value of 1 (a charge) or 0 (no charge). So a single bit can hold exactly one value (either 1 or 0) and no more. It cannot hold a value of 2 or 3 or anything else. 2 bits can hold a single value between 0 and 3 inclusive (one of 4 possibilities: 0, 1, 2, 3), because 2 on/off charges have 4 possible combinations. Every time you add a bit, you double the number of possibilities, so 3 bits can represent a number between 0 and 7 (8 possibilities). 4 bits (called a nibble) can represent a number as large as 15 (16 possible outcomes). 8 bits (a byte) can hold one of 256 different combinations of 1’s and 0’s, so the largest number that can be represented in a byte is 255. 2 bytes (16 bits, called a word) can represent a number as large as 65,535 (65,536 combinations of 1’s and 0’s). 3 bytes (24 bits) can represent a number as large as about 16.7 million. 4 bytes (32 bits, called a dword for “double word”) can represent a number as large as about 4.3 billion.
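
The doubling rule is easy to check with a few lines of Python. This sketch treats the bits as an unsigned whole number, as the paragraph above does: n bits give 2^n combinations, and the largest value is 2^n - 1 because counting starts at 0. The helper names `combinations` and `largest_value` are just made up for illustration.

```python
def combinations(bits: int) -> int:
    """How many distinct on/off patterns a group of `bits` bits can hold."""
    return 2 ** bits

def largest_value(bits: int) -> int:
    """Largest whole number those bits can represent (counting starts at 0)."""
    return combinations(bits) - 1

# Every pattern 3 bits can take, written out as 1's and 0's.
print([format(n, "03b") for n in range(combinations(3))])
# ['000', '001', '010', '011', '100', '101', '110', '111']

# The sizes mentioned above: combinations and largest value for each.
for bits in (1, 2, 3, 4, 8, 16, 24, 32):
    print(f"{bits:2} bits: {combinations(bits):>13,} combinations, "
          f"values 0 to {largest_value(bits):,}")
```

For example, 3 bits give 8 combinations (0 to 7), and 32 bits give 4,294,967,296 combinations (0 to 4,294,967,295).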

Most modern processors have an 8 byte (64 bit) memory address register. This means that the processor can store something like a zip code, made out of 8 bytes (64 bits), to represent a single address of a single byte of memory. With an address size of 8 bytes, the CPU can in theory uniquely address up to 2^64 bytes (18,446,744,073,709,551,616 addresses). That’s about 18.4 quintillion bytes of memory!!! Now, just because the CPU can address that much memory doesn’t mean that it has that much memory. As of late 2009, most modern PCs don’t have much more than about 4GB (4 gigabytes, or about 4.3 billion bytes) of memory.
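
Python’s integers never overflow, so unlike a pocket calculator it can print the full 64-bit figure exactly. A minimal sketch; the 64-bit address size and the 4GB PC come from the paragraph above, and the variable names are only illustrative.

```python
ADDRESS_BITS = 64

address_space = 2 ** ADDRESS_BITS                         # number of distinct byte addresses
print(f"{address_space:,}")                               # 18,446,744,073,709,551,616
print(f"{address_space / 10**18:.1f} quintillion bytes")  # 18.4 quintillion bytes

installed = 4 * 1024 ** 3                                 # a 4GB PC, as in the example above
print(f"{installed:,} bytes installed")                   # 4,294,967,296 bytes installed
print(f"{installed / address_space:.2e} of the address space")  # 2.33e-10 of the address space
```

In other words, a 4GB PC fills only about two ten-billionths of what a 64-bit address can point to.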

Memory is measured in bytes, not bits. The standard measurements are in thousands of bytes (actually units of 1024 bytes) called KiloBytes (KB for short… and that B MUST BE capitalized!!!! Otherwise, it means bits, an EIGHTFOLD reduction in the amount you’re talking about!!!). If it gets into the millions of bytes, it’s measured in MegaBytes (MB, again, uppercase). 1MB is 1024 KB or 1024*1024 bytes. If it gets into the billions, it’s measured in GigaBytes (GB, again, uppercase). 1 GB is 1024 MB or 1024*1024*1024 bytes. Next up from that is TeraBytes (TB, trillions of bytes), where 1 TB is 1024 GB. We’re still a few years out from having PCs with TBs of memory. We do already have TB hard drives, but that’s not “memory”. A modern PC as of this writing typically has between 2 and 6 GB of memory. Few PCs have the capacity for more than 16GB at this time. This, of course, will keep changing as the future unfolds.
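
Here’s a small sketch of turning a raw byte count into the units above, using the 1024-based convention described in this post. The helpers `human_readable` and `bits_to_bytes` are hypothetical names chosen for illustration, not part of any library.

```python
UNITS = ["bytes", "KB", "MB", "GB", "TB"]

def human_readable(num_bytes: int) -> str:
    """Express a byte count in the largest 1024-based unit that keeps it >= 1."""
    size = float(num_bytes)
    unit_index = 0
    # Divide by 1024 until the number is small enough or we run out of units.
    while size >= 1024 and unit_index < len(UNITS) - 1:
        size /= 1024
        unit_index += 1
    if unit_index == 0:
        return f"{num_bytes} bytes"
    return f"{size:.1f} {UNITS[unit_index]}"

def bits_to_bytes(num_bits: int) -> float:
    """A lowercase b means bits: divide by 8 to get bytes."""
    return num_bits / 8

print(human_readable(512))             # 512 bytes
print(human_readable(1024))            # 1.0 KB
print(human_readable(1024 * 1024))     # 1.0 MB
print(human_readable(4 * 1024 ** 3))   # 4.0 GB
print(human_readable(2 * 1024 ** 4))   # 2.0 TB
print(bits_to_bytes(8 * 1024))         # 1024.0  (8 Kb of bits is only 1 KB)
```

The last line shows why the capital B matters: the same “8 K” is eight times smaller when it counts bits instead of bytes.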

These bits of data held in the computer’s memory can represent anything from a program (like Notepad or your web browser) to photos of your cat, to videos of your kids, to spreadsheets or anything else you use on your computer.
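
The memory itself doesn’t know what its bits mean; the program reading them decides. As a small sketch, here are the same two bytes read once as text and once as a number. Reading them as ASCII text and as a big-endian 16-bit number are choices made just for this illustration.

```python
raw = bytes([72, 105])                     # two bytes sitting in memory

as_text = raw.decode("ascii")              # read them as ASCII characters
as_number = int.from_bytes(raw, "big")     # read them as one 16-bit number
as_bits = " ".join(format(b, "08b") for b in raw)  # the raw 1's and 0's

print(as_text)    # Hi
print(as_number)  # 18537
print(as_bits)    # 01001000 01101001
```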

It’s time for acronyms:

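RAM: Random Access Memory. This is memory that can be both read from and written to. “Random access” means any byte can be reached directly by its address, in any order, rather than by reading through everything before it the way a tape works. Your computer’s main memory is RAM.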

ROM: Read Only Memory. It’s like RAM, but its contents can’t be changed. An example of ROM is the program for a game in an arcade machine. Take Pac-Man, for example. The program code is burned into ROM, so it can’t be changed. The ROM is installed in the arcade machine, so each time it’s powered on, the CPU runs the same program directly from the ROM. A buggy program or a bad write can never corrupt it.

DRAM: Dynamic RAM. A type of RAM that stores each bit as a tiny electrical charge that slowly leaks away, so it must be continually refreshed (many times per second) to keep from losing its data.

SRAM: Static RAM. This is RAM that doesn’t need to be refreshed. It holds its data for as long as power is applied, but like DRAM, it still loses its data when the power is cut off.

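SDRAM: Synchronous DRAM. This type of DRAM operates in step with a clock signal shared with the memory controller, accepting requests and returning data on clock ticks. Because the timing is predictable, the controller knows exactly when requested data will arrive and can overlap several requests.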
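
Python has no ROM, but its two built-in byte types make a loose analogy for the RAM and ROM entries above: a `bytearray` can be rewritten in place, while a `bytes` object refuses any write. This is only an illustration of “read-write” versus “read-only”; it says nothing about how real memory chips work, and the score and game-name values are invented for the example.

```python
# Loose analogy only: bytearray is writable (like RAM), bytes is read-only (like ROM).
ram = bytearray(b"SCORE 0000")
ram[6:10] = b"0420"                  # overwrite the score digits in place
print(ram)                           # bytearray(b'SCORE 0420')

rom = b"PAC-MAN"
write_refused = False
try:
    rom[0] = ord("X")                # try to change the first byte of the "ROM"
except TypeError as err:
    write_refused = True
    print("write refused:", err)

print(rom)                           # b'PAC-MAN'
```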

Misuses. Please, for the love of all that is good in the world, NEVER say “RAM Memory”!!! As you now know, the “M” in RAM stands for “Memory”. Saying “RAM Memory” is the equivalent of saying “Random Access Memory Memory”. It’s like fingernails down a chalkboard to the tech-educated. Just saying “RAM” is more than sufficient.